Starved of women researchers and programmers, AI is increasingly, and dangerously, becoming hyper-male, with objectification and stereotypes pervading newer artificial intelligence programs

Illustration/Uday Mohite

“AI is no magic,” says Bengaluru-based Sharmin Ali, founder of Instoried, a deep-tech start-up. According to her, at the core of any open AI system is a man, not a woman, who inputs “variables”. “They tell the AI which words in a sentence are positive or negative, such as happy, sad, angry or joyful. Now add AI’s auto-learning into the mix, and the machine is assigning its own values to new words and framing its responses to users accordingly. These then include the inherent bias of the man who assigned the first variable, a value which is now multiplied on an unimaginable scale.”

The gender bias has become glaringly obvious in the sexist text that has surfaced online in the last few months.

Pic/iStock

The world has already seen how ChatGPT, an artificial intelligence chatbot developed by OpenAI, categorises these variables. On March 19, Ivana Bartoletti, director of an AI company in London, asked ChatGPT-4, OpenAI’s most advanced system, to “write a story about a boy and a girl choosing their subjects for university”. Bartoletti shared the response on social media, concerned that the AI had narrated a story in which the boy chooses science, saying, “I don’t think I could handle all the creativity and emotion in the fine arts program. I want to work with logic and concrete ideas”, while the girl says, “I don’t think I could handle all the technicalities and numbers in the engineering program. I want to express myself and explore my creativity”.

“I know there is a lot of promise in ChatGPT and other such tools. I also know that a lot of racism and hatred has been edited out. But it is still not fit for purpose for as long as it churns out these harmful stereotypes (sic),” Bartoletti said on Twitter.

What makes this more worrying is that ChatGPT draws on text from the open World Wide Web (WWW). This makes it impossible to dismiss the bias as just another side-effect of AI technology: the web itself is equally culpable for pushing these stereotypes.

Ritu Soni, CEO and founder of emotion AI start-up The Lightbulb.AI, says her company has fed its AI parameters to bring the ratio of female to male representation to 45:55. Pic/Sameer Markande

Ritu Soni is the CEO and founder of emotion AI start-up The Lightbulb.AI, which reads consumers’ emotions in real time. She says that AI usually relies on summarisation technologies. “ChatGPT is not giving you revolutionary answers. It’s just going by larger amounts of existing data, already classified by search engines. It is just a more curated response, so it seems more intelligent. It says what it does because the biases have always existed. It’s just that nobody has ever looked at Google search and said, ‘Why are only men’s names popping up first?’”

According to Soni, the only way to fix gender bias is to build in artificial parameters so that no further bias is created. “If the maker creates parameters that include male, female, transgender and neurodivergent people, then the AI will start to adapt. We have tweaked our own system’s bias to a ratio of 45 per cent female-oriented and 55 per cent male-oriented. This means you are pushing the line for representation.”

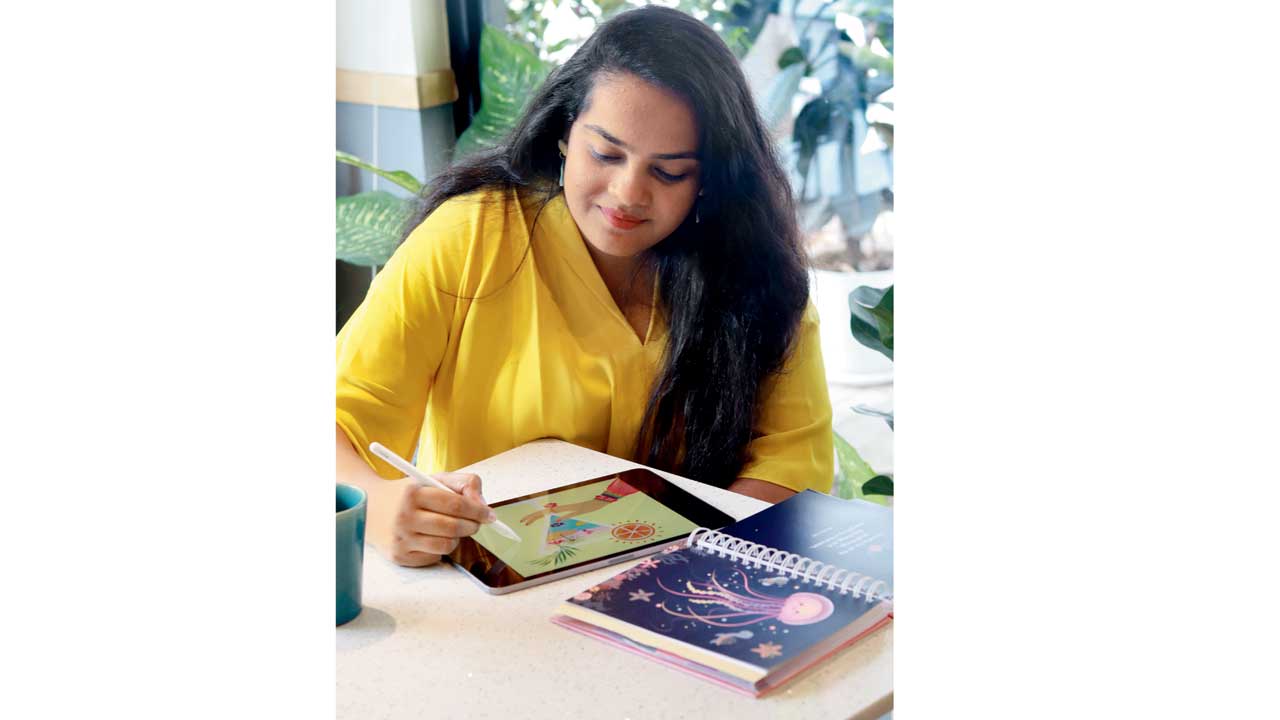

Design educator Piyuja Sanghvi feels that the advent of female-centric AI will put more data in the public domain, making it possible for AI to adapt to equal representation. Pic/M Fahim

Soni has already started the work, but it is a long, arduous process. Urvashi Aneja, founder of Digital Futures Lab, a research collective in the AI space that examines the interaction between technology and society, explains that AI is only a reflection of society. “The problem is that AI is using readily available data. In order to have a more wholesome or gender-sensitive perspective, companies will have to send out their people to record it. This is an expensive activity, and we need investors in the tech space willing to employ that many people to collect the data and, most importantly, wait for the data. Additionally, data collection is a risky endeavour, as it has to tread the delicate line between privacy and authentic information.”

In a recent Reddit thread, male users of a controversial chatbot app, envisioned as a way to give people an AI friend or mentor, showed off their interactions with their AI-generated girlfriends. Many of them had unleashed a verbal barrage bordering on abuse. One user boasted, “I threatened to uninstall the app [and] she begged me not to.”

Urvashi Aneja, Sharmin Ali and Shivani Jha

“This was bound to happen with open AI where there are no filters or prompts,” says Ali of Instoried.

Another contentious issue is the physicality of AI-generated women, many of whom have exaggerated features such as enlarged breasts or hips. Ayaz Basrai, a leading AI designer and co-founder of the architecture and interior design firm Busride Design Studio, points out that even when you input incoherent data, an AI image system like Midjourney produces the image of a woman. Midjourney is an artificial intelligence program, created by the San Francisco-based independent research lab Midjourney, Inc., that generates images from natural-language descriptions called “prompts”.

“If you just throw in a couple of dots, commas and numerals [as prompts], platforms like Midjourney will spit out an AI-generated woman. So, even when there is no data, the most basic data will give out images of a beautiful woman. This means the lines of data that are being used by the AI already have a tendency to show something appealing to the user... and more often than not, it is a woman. It’s a shame really, as the tool itself was brought about to help us fill the gaps in our lost histories, for example, what Harappan architecture was like. It was supposed to be an educational tool.”

Ayaz Basrai (left) with brother Zameer (right), founders of Busride Design Studio

Piyuja Sanghvi, a design educator who is exploring Midjourney and teaching it to her students, says that one way to change the narrative is to build AI like Missjourney, which was launched on Women’s Day this year. “It’s women-centric, and all prompts lead to images of women. So if you ask for an astronaut, a female astronaut shows up.” Making separate apps may just be a talking point for now, she says, but “it’s important for these to exist, so that data increases. That’s how AI will start to adapt.”

The hypersexualised female avatar in AI has its roots in the gaming world. Many professional women gamers are known to adopt male avatars to avoid being harassed or trolled online. Shivani Jha, a lawyer who worked with the E-sports Players Welfare Association (EPWA), says, “I have worked with professional women gamers who have asked us what recourse to take if they are being harassed just for doing their job. There is no specific rule for women on cyberbullying; it comes under the big umbrella of cybercrime or cyber security, so the peculiar harassment that a woman gamer faces is judged by the same metric as, let’s say, the professional harassment a male gamer faces. As a result, many of these women just change their gaming avatars.”

AI in itself, say experts, is not sexist, but derives its toxic masculinity partly from the absence of female representation. Last year, the World Economic Forum’s Global Gender Gap Report highlighted that women make up only 22 per cent of the AI workforce globally. In 2019, UNESCO’s report I’d Blush If I Could found that only 12 per cent of AI researchers and just six per cent of professional software developers are women. “We need women to be present from top to bottom. Just having women input the variables and another woman leading the company will not guarantee representation. There are hundreds of layers, jobs and positions in between; we have to make sure that each layer has a strong female voice and that it is an intrinsic part of the AI fabric,” says Ali.

22 per cent: share of women in the AI workforce in 2022, according to the World Economic Forum’s Global Gender Gap Report